What Is the Function of a Graphics Card?

Pixels are the small dots that make up the images you see on your display. At most typical resolution settings a screen shows over a million pixels, and the computer has to decide what to do with every one of them to form a picture. To do this, it needs a translator: something that can take binary data from the CPU and turn it into a visible image. Unless a computer has built-in graphics capabilities, this translation happens on the graphics card.
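To put "over a million pixels" in perspective, the short Python sketch below multiplies width by height for two common resolutions. The function name `pixel_count` and the list of resolutions are illustrative choices, not part of any graphics API.

```python
def pixel_count(width: int, height: int) -> int:
    """Return how many pixels a width x height display contains."""
    return width * height


# Illustrative resolutions (width, height), chosen only as examples.
RESOLUTIONS = [(1280, 1024), (1920, 1080)]

if __name__ == "__main__":
    for width, height in RESOLUTIONS:
        # Prints e.g. "1920 x 1080 = 2,073,600 pixels"
        print(f"{width} x {height} = {pixel_count(width, height):,} pixels")
```

At 1920 × 1080 that comes to 2,073,600 pixels, every one of which has to be worked out for each frame.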

The job of a graphics card is complicated, but its principles and components are easy to understand. In this post, we’ll look at the basic parts of a graphics card and what they do. We’ll also look at the factors that make a graphics card fast and efficient.

Think of a computer as a company with its own art department. When people in the company want a piece of artwork, they send a request to the art department. The art department decides how to create the image and then puts it on paper. The result is that someone’s idea becomes a real, viewable picture.

The same rules apply to graphics cards.

The CPU, working together with software applications, sends information about the image to the graphics card. The graphics card decides how to use the pixels on the screen to create that image. It then sends the result to the monitor through a cable.

Creating an image from binary data is a demanding process. To make a 3-D image, the graphics card first builds a wireframe out of straight lines. It then rasterizes the image, filling in the remaining pixels. It also adds lighting, texture, and colour. For fast-paced games, the computer has to repeat this process about sixty times per second. Without a graphics card to perform the necessary calculations, the workload would be too heavy for the CPU to handle.
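The rasterization step can be illustrated with a toy CPU version. This is a minimal sketch, assuming a single flat triangle and one sample at the centre of each pixel; it uses the common "edge function" inside test rather than anything specific to a particular card, and the helper names (`edge`, `rasterize_triangle`, `frame_budget_ms`) are hypothetical. `frame_budget_ms` shows why sixty frames per second is demanding: each frame gets only about 16.7 milliseconds.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def edge(a: Point, b: Point, p: Point) -> float:
    """Cross product of (b - a) and (p - a); its sign says which side of edge a->b p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize_triangle(v0: Point, v1: Point, v2: Point,
                       width: int, height: int) -> List[List[int]]:
    """Return a height x width grid holding 1 for pixels whose centre lies inside the triangle."""
    grid = [[0] * width for _ in range(height)]
    area = edge(v0, v1, v2)  # twice the signed area of the triangle
    if area == 0:
        return grid  # degenerate (flat) triangle: nothing to fill
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside (or on an edge) when no edge test has the opposite sign to the area;
            # checking against the area's sign makes this work for either vertex order.
            if area > 0:
                inside = w0 >= 0 and w1 >= 0 and w2 >= 0
            else:
                inside = w0 <= 0 and w1 <= 0 and w2 <= 0
            if inside:
                grid[y][x] = 1
    return grid


def frame_budget_ms(fps: float = 60.0) -> float:
    """Time available to draw one frame at the given frame rate, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    for row in rasterize_triangle((0, 0), (8, 0), (0, 8), 8, 8):
        print("".join("#" if filled else "." for filled in row))
    print(f"Budget per frame at 60 fps: {frame_budget_ms(60):.1f} ms")
```

A GPU runs this kind of per-pixel test on many pixels in parallel, which is a large part of why handing the work to the graphics card pays off.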

This is accomplished by the graphics card’s four primary components:

- A motherboard connection for data and power

- A processor that decides what to do with each pixel on the screen

- Memory for storing information about each pixel and temporarily holding finished images

- A monitor connection so you can see the final result

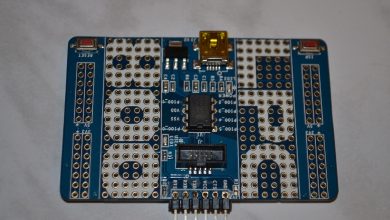

Like a motherboard, a graphics card is a printed circuit board that houses a processor and RAM. It also has a basic input/output system (BIOS) chip, which stores the card’s settings and runs diagnostics on the memory, input, and output at startup. A graphics card’s processor, called a graphics processing unit (GPU), is similar to a computer’s CPU. Some of the most powerful GPUs have more transistors than a typical CPU.

In addition to its own processing power, a GPU uses special programming to help it analyse and use data. ATI and nVidia make the vast majority of GPUs on the market, and both companies have developed their own enhancements for GPU performance.

Processors utilise the following techniques to increase image quality:

- Full-scene anti-aliasing (FSAA), which smooths the jagged edges of 3-D objects.

- Anisotropic filtering (AF), which makes images sharper, particularly textures on surfaces seen at a steep angle. Each manufacturer has also developed its own methods to help the GPU apply colours, shading, textures, and patterns.

As the GPU creates images, it needs somewhere to store information and finished pictures. It uses the card’s RAM for this, storing data about each pixel, its colour, and its position on the screen. A portion of the RAM can act as a frame buffer, holding finished images until it is time to display them.

The RAM connects to a digital-to-analog converter called the RAMDAC, which translates the image into an analog signal that an analog monitor can use. Some cards include several RAMDACs, which can boost performance and support multiple monitors. More information on this process can be found in How Digital and Analog Recording Works.

The RAMDAC sends the finished image to the display through a cable. In the next part, we’ll look at this connection as well as other interfaces.
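Full-scene anti-aliasing can be done in several ways. One of the simplest is supersampling: render the scene at a higher resolution, then average each group of samples down to one screen pixel, so pixels along an edge get in-between values instead of a hard jump. The sketch below assumes a grey-scale image stored as nested lists and a made-up diagonal-edge scene (`render_coverage` is hypothetical); real GPUs often use cheaper variants such as multisample anti-aliasing (MSAA).

```python
from typing import List

Image = List[List[float]]


def render_coverage(width: int, height: int) -> Image:
    """Hypothetical scene: 1.0 where column > row (one side of a diagonal edge), else 0.0."""
    return [[1.0 if x > y else 0.0 for x in range(width)] for y in range(height)]


def downsample(hi_res: Image, factor: int) -> Image:
    """Average each factor x factor block of samples into one output pixel."""
    rows, cols = len(hi_res), len(hi_res[0])
    if rows % factor or cols % factor:
        raise ValueError("image size must be a multiple of the supersampling factor")
    out: Image = []
    for y in range(0, rows, factor):
        row = []
        for x in range(0, cols, factor):
            block = [hi_res[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


if __name__ == "__main__":
    factor = 2
    # Render at 2x the target resolution in each direction, then average down to 2 x 2.
    hi_res = render_coverage(2 * factor, 2 * factor)
    print(downsample(hi_res, factor))  # [[0.25, 1.0], [0.0, 0.25]]
```

The two pixels that straddle the edge come out at 0.25 rather than jumping straight from 0 to 1, which is what softens the jagged look. The cost is rendering factor² as many samples, which is why cheaper schemes exist.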

Graphics cards have come a long way since IBM introduced the first one in 1981. That card, the Monochrome Display Adapter (MDA), could display only text, as green or white characters on a black screen. Video Graphics Array (VGA), which supports 256 colours, is now the minimum standard for new video cards. With high-performance standards such as Quantum Extended Graphics Array (QXGA), video cards can display millions of colours at resolutions of up to 2048 × 1536 pixels. The PCI Express (PCIe) interface also allows multiple graphics cards to be used at the same time in one machine.
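The colour counts and resolutions above translate directly into memory. With b bits per pixel a card can represent 2^b colours, so 8 bits give VGA’s 256 colours and 24 bits give about 16.7 million. The sketch below is a minimal estimate, assuming a single uncompressed frame buffer with no row padding and no extra buffers; the function names are hypothetical.

```python
def colours_for_bits(bits_per_pixel: int) -> int:
    """Number of distinct colours a pixel of the given bit depth can represent."""
    return 2 ** bits_per_pixel


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes for one uncompressed frame, rounded up to a whole byte (no row padding assumed)."""
    return (width * height * bits_per_pixel + 7) // 8


if __name__ == "__main__":
    print(colours_for_bits(8))    # 256, the VGA colour count
    print(colours_for_bits(24))   # 16777216, i.e. "millions of colours"
    size = framebuffer_bytes(2048, 1536, 24)  # QXGA at 24 bits per pixel
    print(f"{size:,} bytes = {size / 2**20:.1f} MiB")  # 9,437,184 bytes = 9.0 MiB
```

A single QXGA frame at 24-bit colour therefore needs about 9 MiB, before counting any extra frames the card keeps while the next image is being drawn.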

MONITORS

Most graphics cards have two monitor connections. Typically, one is a DVI connector for LCD panels and the other is a VGA connector for CRT monitors. Some graphics cards have two DVI connectors instead. That doesn’t rule out using a CRT monitor, though; CRTs can be connected to DVI ports with an adapter. Apple once made monitors that used the proprietary Apple Display Connector (ADC). Although some of these older displays are still in use, Apple’s newer panels use a different connector. If you want to use two monitors, you can get a video card with dual-head capability, which splits the display between the two screens. A computer with two PCIe dual-head video cards could, in theory, support four monitors.
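With dual-head spanning, the two screens behave like one wide desktop. As a hedged illustration (the function `locate` and the side-by-side layout are assumptions, not how any particular driver works), the sketch below maps a point on that combined desktop to the monitor that shows it and to its position on that monitor.

```python
from typing import List, Tuple


def locate(x: int, y: int, widths: List[int], height: int) -> Tuple[int, int, int]:
    """Map a point on a spanned desktop to (monitor index, local x, local y).

    Assumes the monitors sit side by side, left to right, and share the same height.
    """
    if x < 0 or not 0 <= y < height:
        raise ValueError("point is outside the desktop")
    left = 0  # x coordinate where the current monitor starts
    for index, width in enumerate(widths):
        if x < left + width:
            return index, x - left, y
        left += width
    raise ValueError("point is outside the desktop")


if __name__ == "__main__":
    screens = [1920, 1920]  # two hypothetical 1920 x 1080 monitors
    print(locate(100, 50, screens, 1080))   # (0, 100, 50): left monitor
    print(locate(2000, 50, screens, 1080))  # (1, 80, 50): right monitor
```

Adding more entries to `screens` extends the same idea to the four-monitor case mentioned above.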

In addition to the motherboard and display connectors, some graphics cards include extra connections, and some include TV tuners as well. Next, we’ll look at how to choose a graphics card.
